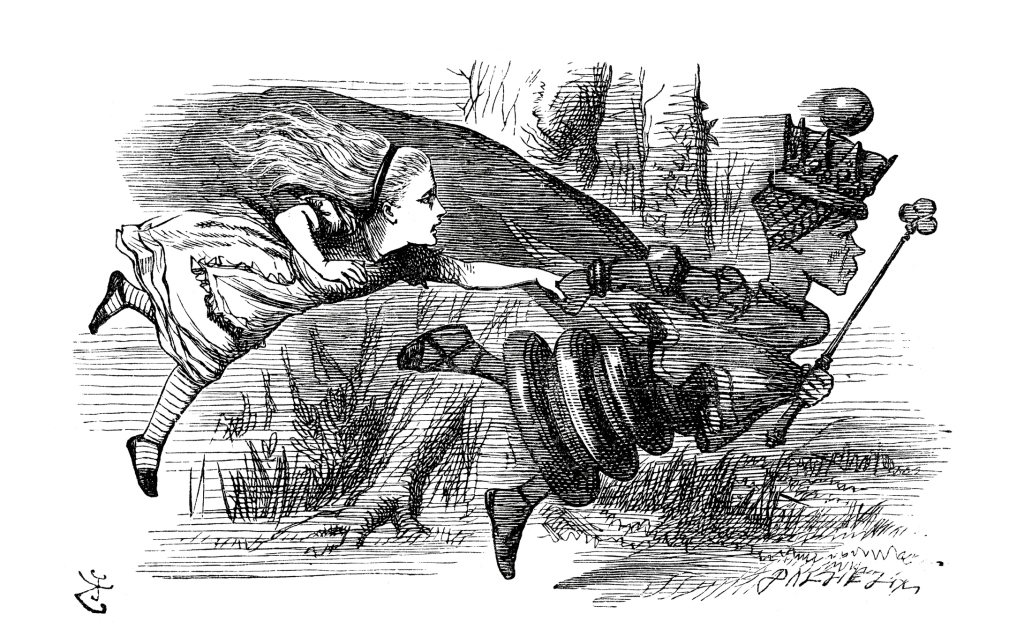

“Well, in our country,” said Alice, still panting a little, “you’d generally get to somewhere else—if you run very fast for a long time, as we’ve been doing.”

“A slow sort of country!” said the Queen. “Now, here, you see, it takes all the running you can do, to keep in the same place. If you want to get somewhere else, you must run at least twice as fast, as that!” —Through the Looking-Glass and What Alice Found There, Chapter 2, Lewis Carroll

There have been a number of high-profile examples of project management failure and success over the last several years. Among the failures, the initial rollout of the Affordable Care Act marketplace web portal stands out, and it took a while to understand the causes of its faults free of political bias. The reasons, as the linked article shows, are prosaic and basic to the discipline of project management.

